Tesla has released its latest Autopilot safety report. The report’s limitations remain and its framing is still misleading, but one thing is clear: the data is getting worse.

Tesla is notorious for not releasing meaningful data to prove the safety of its advanced driver-assistance systems (ADAS): Autopilot and Full Self-Driving (Supervised).

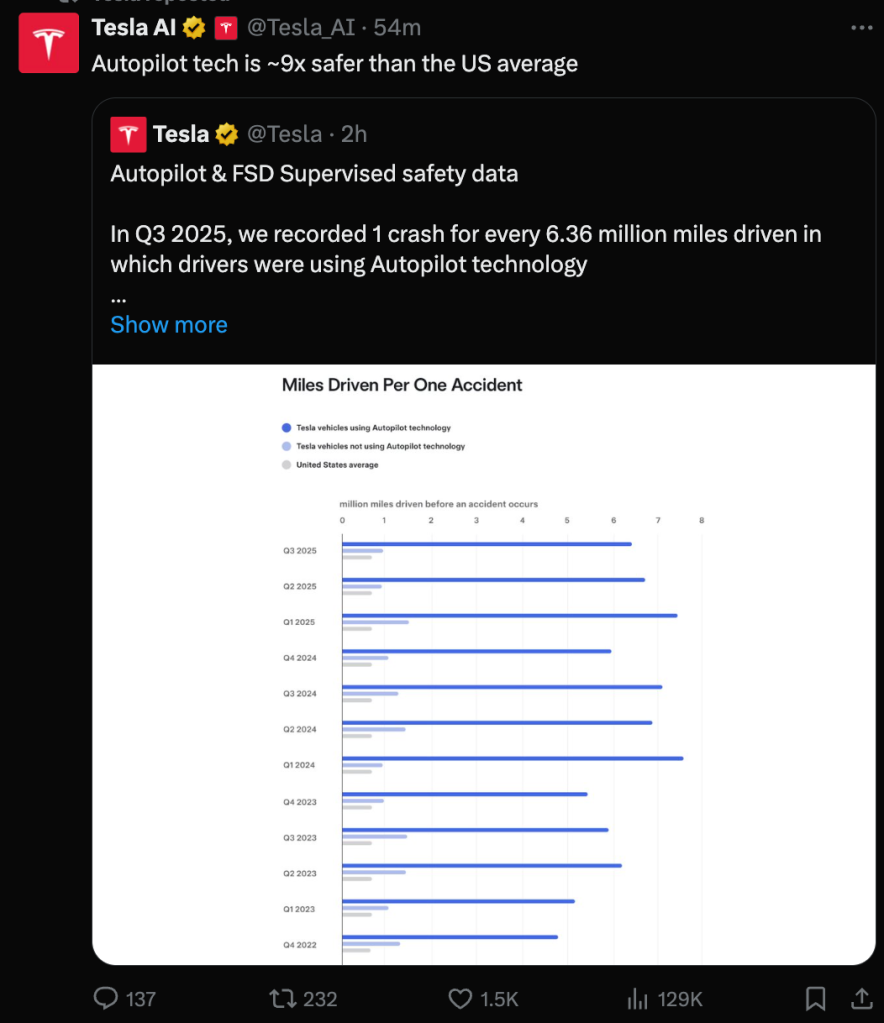

The only data the automaker releases is its quarterly “Autopilot safety report.” Each report gives the miles driven between crashes for Tesla vehicles with Autopilot features turned on, and compares that figure with the miles between crashes for Tesla vehicles driven with those features turned off, as well as with the US average.

There are three major problems with these reports:

- Self-reported methodology. Tesla counts only crashes that trigger an airbag or restraint; minor bumps are excluded, and Tesla discloses neither raw crash counts nor vehicle miles traveled (VMT).

- Road-type bias. Autopilot is mainly used on limited-access highways, which are already the safest roads, while the federal baseline blends all road classes, including city streets, where there are more crashes per mile than on highways.

- Driver mix and fleet age. Tesla’s fleet skews toward newer vehicles, and its drivers skew higher-income and tech-enthusiast; these demographics typically crash less.
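To see why the road-type bias matters, note that a fleet’s overall miles between crashes is a mileage-weighted blend of the crash rates on each road class. The Python sketch below uses invented, hypothetical crash rates (not real NHTSA, FHWA, or Tesla figures) and a hypothetical helper, `miles_between_crashes`, to show how a highway-heavy mix alone can inflate the headline number for the very same drivers.

```python
# Hypothetical illustration only: these rates are invented, not real statistics.
# Crashes per million miles for the SAME drivers on each road class.
CRASHES_PER_MILLION = {"highway": 0.5, "city": 2.0}


def miles_between_crashes(mix: dict[str, float]) -> float:
    """Miles per crash for a given share of miles driven on each road class.

    `mix` maps road class -> fraction of miles driven there (fractions sum to 1).
    The overall crash rate is the mileage-weighted sum of the per-class rates.
    """
    crashes_per_million = sum(
        share * CRASHES_PER_MILLION[road] for road, share in mix.items()
    )
    return 1_000_000 / crashes_per_million


# Same drivers, same per-road behavior; only where the miles are driven changes.
highway_heavy = miles_between_crashes({"highway": 0.9, "city": 0.1})
blended = miles_between_crashes({"highway": 0.3, "city": 0.7})

print(f"Highway-heavy mix: {highway_heavy:,.0f} miles per crash")
print(f"Blended mix:       {blended:,.0f} miles per crash")
```

Nothing about driver skill differs between the two cases, yet under these made-up rates the highway-heavy mix shows more than twice the miles between crashes. That is the distortion a blended all-roads baseline introduces.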

With all these flaws in Tesla’s quarterly Autopilot safety reports, the primary value lies in comparing the miles between crashes with Autopilot features turned on over time.

As we previously reported, even this remains problematic, as Tesla stopped reporting the data for over a year. When it resumed reporting last year, it edited the previously released data.

However, there are reasons to believe Tesla’s data now, as it doesn’t look good for the company.

Here’s Tesla’s latest report for Q3 2025:

> In the 3rd quarter, we recorded one crash for every 6.36 million miles driven in which drivers were using Autopilot technology. For drivers who were not using Autopilot technology, we recorded one crash for every 993,000 miles driven. By comparison, the most recent data available from NHTSA and FHWA (from 2023) shows that in the United States there was an automobile crash approximately every 702,000 miles.
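The multiples Tesla advertises can be reproduced from the three figures in that quote. A minimal Python sketch using only the Q3 2025 numbers Tesla published (the `ratio` helper is just an illustrative name):

```python
# Q3 2025 figures quoted in Tesla's report (miles driven per recorded crash)
AUTOPILOT = 6_360_000   # drivers using Autopilot technology
NO_AUTOPILOT = 993_000  # drivers not using Autopilot technology
US_AVERAGE = 702_000    # US average per NHTSA/FHWA 2023 data, as cited by Tesla


def ratio(miles_a: float, miles_b: float) -> float:
    """How many times more miles between crashes `a` logs than `b`."""
    return miles_a / miles_b


vs_us = ratio(AUTOPILOT, US_AVERAGE)
vs_tesla = ratio(AUTOPILOT, NO_AUTOPILOT)

print(f"Autopilot vs US average: {vs_us:.1f}x")                 # about 9.1x
print(f"Autopilot vs non-Autopilot Teslas: {vs_tesla:.1f}x")   # about 6.4x
```

A roughly 9x multiple only appears against the all-roads US average; against Teslas driven without Autopilot, the gap shrinks to about 6.4x. Neither ratio corrects for the road-type, driver-mix, or methodology problems listed above.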

It’s now the third quarter in a row where Tesla had a year-over-year decline in mileage between crashes:

The data deteriorated enough that Tesla had to give up its misleading claim that “Autopilot is safer than human by 10x” and now says “9x” instead:

The claim is still misleading for the reasons above, and it should be labeled “Autopilot + human driver,” since Autopilot requires driver attention at all times.

There’s no way to know how many accidents human drivers prevented while Autopilot was engaged.

Electrek’s Take


Again, I have to emphasize that this report only has value when you compare the Autopilot mileage against itself over time.

It’s also important to compare the same quarter year-over-year, as accidents are more common in winter, when people drive more often after dark and in more difficult conditions.

Therefore, the only important thing that this report highlights is that Autopilot is getting worse.

Shouldn’t that be worrying? Shouldn’t Tesla address that instead of falsely claiming the data shows Autopilot is 10x, or now 9x, safer than humans?

FTC: We use income-earning auto affiliate links.
