John Krafcik, the former CEO of Waymo, is doubling down on his criticism of Tesla’s self-driving strategy. In new comments, he is going after the hardware itself, specifically Tesla’s insistence on a “vision-only” approach.

One of the godfathers of autonomous driving argues that Tesla’s FSD has a “bad case of myopia.”

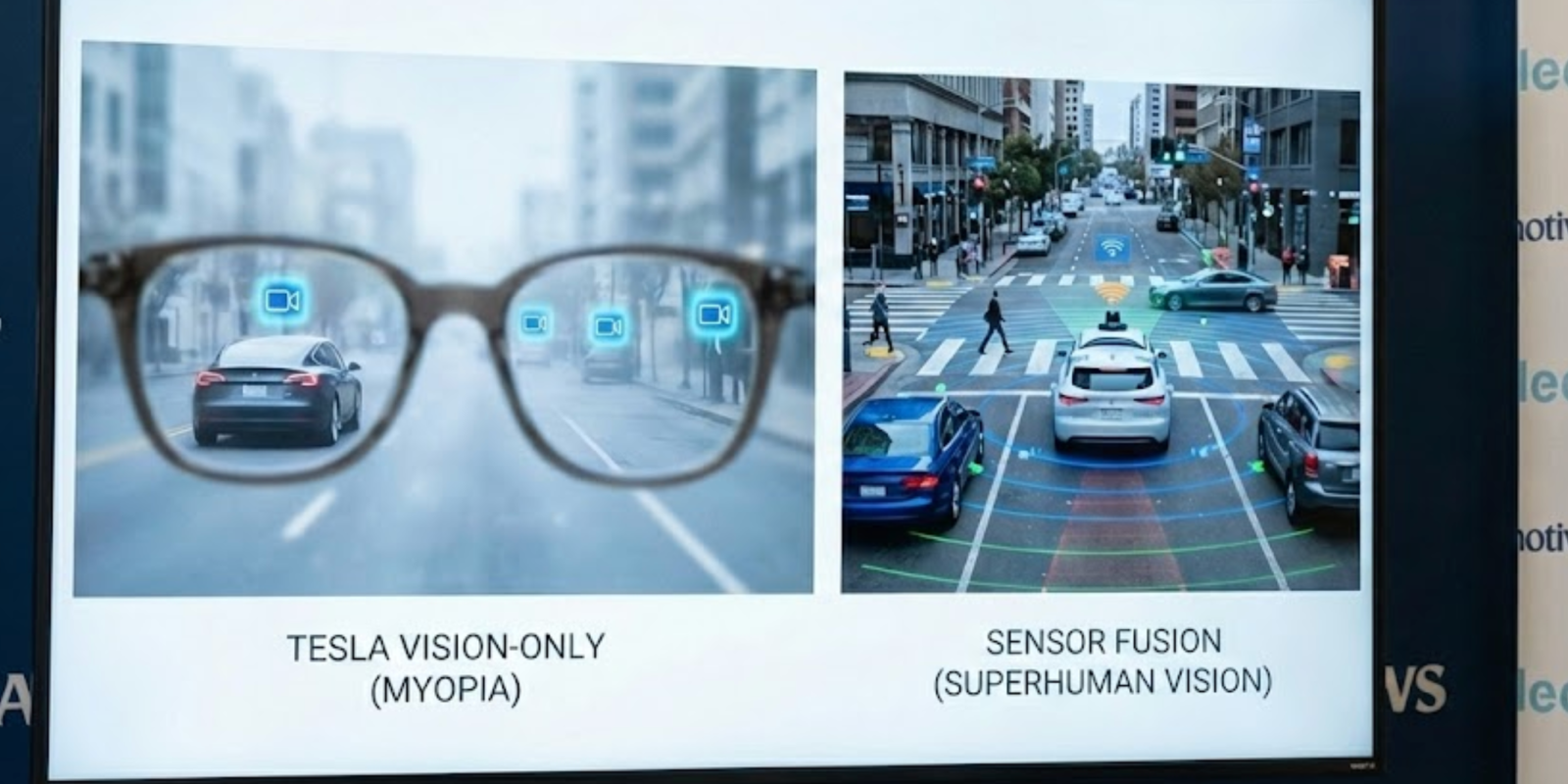

If you have been following the autonomous driving space, you know the debate: Elon Musk believes that since humans drive with eyes (cameras) and a brain (neural nets), cars should be able to do the same. Krafcik, along with the vast majority of the industry, believes that redundancy via LiDAR and radar is non-negotiable for safety.

In a new interview with Automotive News at CES 2026, Krafcik didn’t mince words about the limitations of Tesla’s sensor suite:

Human vision is so much more capable than the vision of a car equipped with seven 5-megapixel cameras, only one of which is narrow-view, while all the others are wide-view. So you’re basically dispersing those 5 megapixels in a way that makes the actual effective vision more like 20/60 or 20/70. The rest of the cameras in a car like that wouldn’t even pass a DMV vision test.

He argued that by removing radar and ultrasonic sensors (USS) years ago, and refusing to adopt LiDAR, Tesla has “handcuffed its AI” to a data stream that is inherently noisier and less reliable than what competitors like Waymo or Zoox are using.

Krafcik added:

And then you have LiDAR and radar providing completely different modalities of active sensing to complement the passive sensing from the cameras. That’s truly superhuman. With that level of data, you can do amazing things, as opposed to a car that has a really bad case of myopia, should be wearing glasses, and operates on a very limited data cycle.

We should probably take his warnings seriously: Krafcik has a track record of being right about Tesla’s self-driving efforts.

Back in early 2025, we reported that Krafcik warned Tesla would “fake” its Robotaxi launch, predicting that the company would simply mimic a working service.

He was spot on. When Tesla rolled out its pilot in Austin later that year, it was confirmed that the vehicles relied heavily on remote monitors and safety drivers, proving his theory that the system wasn’t ready for the generalized, unsupervised autonomy Musk promised.

Tesla CEO Elon Musk repeatedly said that the safety monitors were just there as an extra precaution and would be removed a few months after the June launch. Six months later, in December, he said they would be removed within three weeks. We are now almost a month past that deadline, and Tesla still hasn’t removed them, which is a good thing considering that Tesla’s Robotaxi has a high crash rate even with safety monitors on board.

Now, Krafcik is suggesting that the hardware itself is the reason for that failure. He notes that without the precise depth perception of LiDAR or the velocity data from radar, Tesla’s “cameras-only” system struggles in edge cases that wouldn’t faze a sensor-fused system, such as blinding sunlight, heavy rain, or low-contrast environments.


Electrek’s Take

I’ve been driving Tesla vehicles with FSD for years, and while the progress is undeniable, there’s no evidence that Tesla can reach Level 4 with the current hardware.

Tesla has consistently missed timelines to make its system unsupervised.

Krafcik’s point about “physics vs. software” is the crux of the issue. Tesla is betting that if you throw enough compute and training data at video feeds, the car will “understand” the world perfectly. Krafcik is saying that no amount of compute can fix a camera that is blinded by the sun or covered in mud.

Given that Krafcik correctly called the “smoke and mirrors” nature of the Robotaxi launch last year, and he has decades of experience in this field, his hardware critique carries more weight.

It raises a tough question for Tesla owners: if Krafcik is right again and “vision-only” is a dead end for Level 4 autonomy (real Level 4 autonomy, not the smoke-and-mirrors games Tesla plays), what happens to the millions of vehicles on the road today equipped with Hardware 3 and Hardware 4?

Elon has bet the farm on vision. Krafcik thinks he bet on the wrong horse. Considering that Waymo is operating real robotaxis today while Tesla is still “supervising,” the scoreboard favors Krafcik.
