I just came back from driving about 200 miles (320 km) using Tesla’s (Supervised) Full Self-Driving, and the system is getting better, but it’s also getting more dangerous as it gets better.

The risk of complacency is scary.

Last weekend, I went on a road trip that covered about 200 miles from Shawinigan to Quebec City and back, and I used Tesla’s (Supervised) Full Self-Driving (FSD), v12.5.4.1 to be precise, on almost the entire trip.

Here’s the good and the bad, and the fact that the former is melting into the latter.

The Good

The system increasingly feels natural. The way it handles merging, lane changes, and intersections feels less robotic and more like a human driver.

The new camera-based driver monitoring system is a massive upgrade from the steering wheel torque sensor that Tesla has used for years. I had only one issue with it: it kept alerting me to pay attention to the road even though I was doing just that, and it eventually shut FSD down for the drive because of it.

But this happened only once in the few weeks that I’ve been using the latest update.

For the first time, I can get good chunks of city driving without any intervention or disengagement. It’s still far from perfect, but there’s a notable improvement.

It stopped to let pedestrians cross the street, it handled roundabouts fairly well, and it drives at more natural speeds on country roads (most of the time).

The system is getting good to the point that it can induce some dangerous complacency. More on that later.

As I have been saying for years, if Tesla were developing this technology in a vacuum and not selling it to the public as “about to become unsupervised self-driving”, most people would be impressed by it.

The Bad

Over those ~200 miles, I had five disengagements, including a few in situations that were getting truly dangerous. The car was seemingly about to run a red light once and a stop sign another time.

I say seemingly because it is getting hard to tell sometimes due to FSD often approaching intersections with stops and red traffic lights more aggressively.

It used to drive closer to how I’ve been driving my EVs forever, which consists of slowly decelerating using regenerative braking when approaching a stop. But this latest FSD update often maintains a higher speed going into those intersections and then brakes more aggressively, often using the mechanical brakes.

This is a strange behavior that I don’t like, but I have at least started to get a feel for it, which makes me somewhat confident that FSD would have blown through that red light and that stop sign on those two occasions.

Another disengagement appeared to be due to sun glare in the front cameras. I am getting more of that this time of year as I drive more often around sunset, which comes earlier in the day.

It appears to be a real problem with Tesla’s current FSD configuration.

On top of the disengagements, I had countless interventions. Interventions are when the driver has to input a command, but one that isn’t enough to disengage FSD. They were mainly due to the fact that I keep having to activate my turn signal to tell the system to go back into the right lane after passing.

FSD only goes back into the right lane after passing if there’s a car coming close behind you in the left lane.

I’ve shared this finding on X, and I was disappointed by the response I got. I suspected that this could be due to American drivers making up an important part of the training data. No offense, as this is an issue everywhere, but American drivers on average tend not to respect the guideline (and the law in some places) that the left lane is only for passing.

I feel like this could be an easy fix or at the very least, an option to add to the system for those who want to be good drivers even when FSD is active.

I also had an intervention where I had to press the accelerator pedal to tell FSD to turn left on a flashing green light. It was hesitating to make the turn, and I was holding up traffic behind me.

Electrek’s Take

The scariest part for me is that FSD is getting good. If I take someone with no experience with FSD and take them on a short 10-15 mile drive, there’s a good chance I get no intervention, and they come out really impressed.

It is the same with a regular Tesla driver who consistently gets good FSD experiences.

This can build complacency in drivers and result in them paying less attention.

Fortunately, the new driver monitoring system can greatly help with that since it tracks driver attention, unlike Tesla’s previous system. However, it only takes a second of not paying attention to get into an accident, and the system allows you that second of inattention.

Furthermore, the system is getting so good at handling intersections that even if you are paying attention, you might end up blowing through a red light or stop sign, as I have mentioned above. You might feel confident that FSD is going to stop, but with its more aggressive approach to the intersection, you let it go even though it doesn’t start braking as soon as you would like it to, and then before you know it, it doesn’t brake at all.

There’s a four-way stop near my place on the south shore of Montreal that I’ve driven through many times with FSD without issue and yet, FSD v12.5.4 was seemingly about to blow right past it the other day.

Again, it’s possible that it was just braking late, but it was way too late for me to feel comfortable.

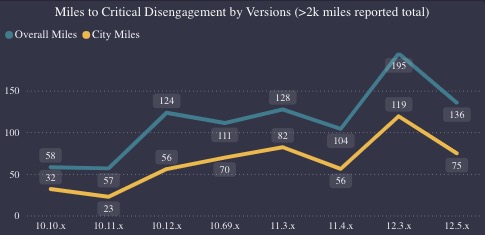

Also, while it is getting better, and at a more noticeable pace lately, the crowdsourced data, which is the only data available as Tesla refuses to release any, points to FSD still being years away from being capable of unsupervised self-driving:

Tesla would need about a 1,000x improvement in miles between disengagements.
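
To give a sense of how that metric works, here is a rough back-of-the-envelope sketch in Python using my own trip numbers from above (about 200 miles and five disengagements). Keep in mind that the 1,000x figure comes from the crowdsourced data, not from my trip, and that my count includes every disengagement rather than only critical ones, so the scaled number below is only an illustration. The function and variable names are mine.

```python
def miles_between_disengagements(miles: float, disengagements: int) -> float:
    """Average distance driven per disengagement."""
    if disengagements <= 0:
        raise ValueError("need at least one disengagement to compute a rate")
    return miles / disengagements


# Approximate figures from this one trip.
trip_miles = 200
trip_disengagements = 5

trip_rate = miles_between_disengagements(trip_miles, trip_disengagements)

# Assumption for illustration only: apply the 1,000x factor to this trip's rate.
# The article's 1,000x estimate is based on crowdsourced data, not on this trip.
improvement_factor = 1_000
scaled_rate = trip_rate * improvement_factor

print(f"{trip_rate:.0f} miles between disengagements on this trip")
print(f"{scaled_rate:,.0f} miles after a {improvement_factor:,}x improvement")
```

A single 200-mile trip is far too small a sample to say anything about the fleet, which is why the crowdsourced numbers matter.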

I’ve lost a lot of faith in Tesla getting there due to things like the company’s recent claim that it completed its September goals for FSD, which included a “3x improvement in miles between critical disengagement” without any evidence that this happened.

In fact, the crowdsourced data shows a regression on that front between v12.3 and v12.5.

I fear that Elon Musk’s attitude and repeated claims that FSD is incredible, combined with the fact that it is actually getting better and that his minions are raving about it, could lead to dangerous complacency.

Let’s be honest. Accidents with FSD are inevitable, but I think Tesla could do more to reduce the risk – mainly by being more realistic about what it is accomplishing here.

It is developing a really impressive vision-based ADAS, but it is nowhere near becoming unsupervised self-driving.
