I finally got a chance to test Tesla Full Self-Driving (Supervised) v12.5 myself. My first impression is that the system drives more naturally, but it is still dangerous.

Vigilance is paramount.

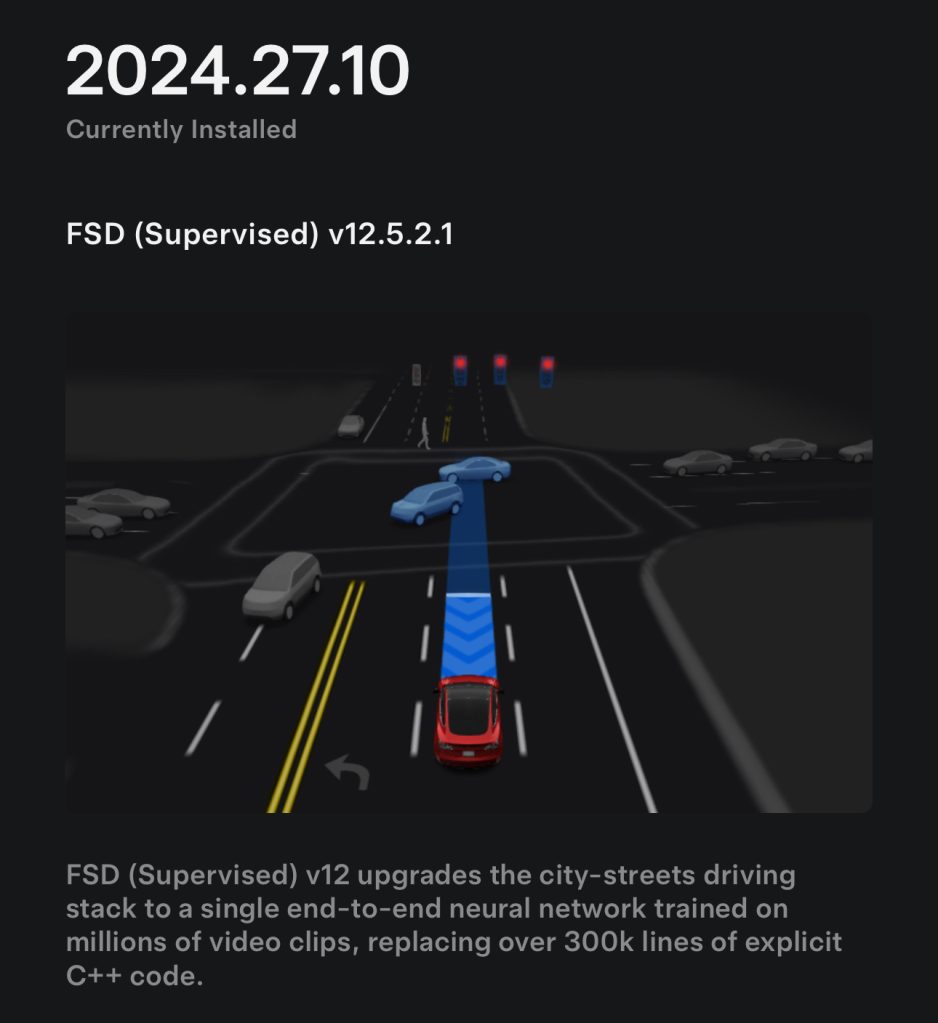

Yesterday, I finally received a software update on my Tesla Model 3 that read ‘v12.5’ (12.5.2.1, to be exact).

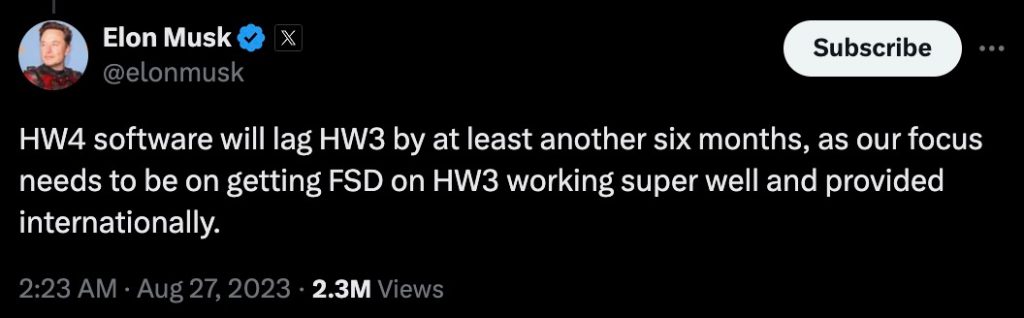

I had been waiting for it for a while. Call me naive, but when Tesla CEO Elon Musk said last year that FSD updates on HW4 (newer Tesla vehicles) would lag about 6 months behind HW3 (older Tesla vehicles), I believed him.

It made sense. He had promised unsupervised self-driving on millions of HW3 vehicles for years, so it seemed logical for Tesla to focus on delivering that promise on these older vehicles with less computing power before taking advantage of the greater computing power of newer HW4 vehicles.

However, that didn’t happen.

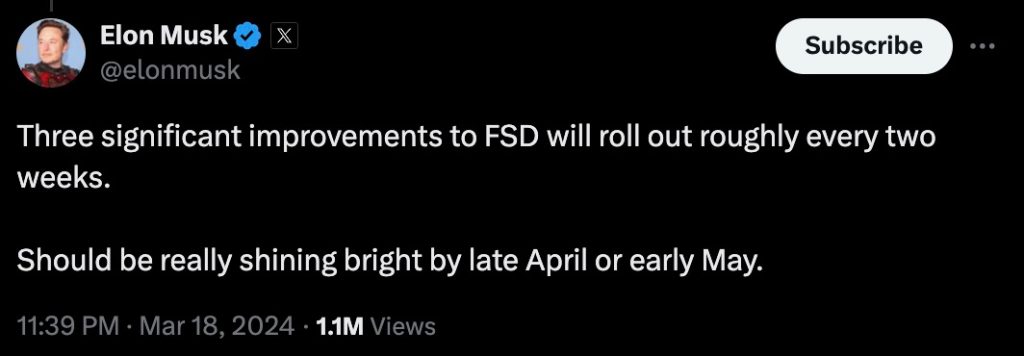

Earlier this year, Musk promised that significant FSD improvements would roll out every two weeks.

But I got FSD v12.3.6 on my HW3 Tesla Model 3 at the time and didn't receive another FSD update for 6 months.

Instead, Musk admitted that Tesla now needs to optimize its FSD code to work on older HW3 vehicles, and that HW4 vehicles are getting more updates sooner, a sign that Tesla is reaching the limits of the hardware on older vehicles despite the promises made.

While this situation has greatly reduced my hopes of Tesla ever delivering on its promise of self-driving on millions of vehicles sold since 2016, I was still excited to see FSD v12.5 in the release notes on my car after an update this week.

Yesterday, I had to drive from Shawinigan to Trois-Rivières (25 miles) and back. It was a good opportunity to test the system on both surface streets and highways.

My first impression is that there are significant improvements in driving comfort. It drives far more naturally, and the auto speed mode helps a lot: it drives at a speed that makes sense for the road rather than sticking to the speed limit plus or minus whatever offset you set.

The new vision-based driver monitoring system also makes the experience feel more natural. It replaces the alerts asking you to tug on the steering wheel, which would appear even when you were already holding it.

Now, if you don't look at the road ahead for a few seconds, you get an on-screen alert telling you to pay attention, which goes away once you bring your attention back to the road or apply input to the steering wheel.

These were the main changes. I didn't see a significant improvement in performance, but the system is at least more enjoyable to use now. It makes using Tesla FSD feel less like a job and more like a feature, which is a welcome change after more than two years of "FSD Beta" and now "Supervised FSD".

I had to disengage the system as I was approaching my destination because the car insisted on moving into the right lane when I needed to be in the left lane to stop. This was a minor annoyance, but a disengagement nonetheless.

On the way back, the car slowed down from my set speed on the highway for no apparent reason. This is a common problem with Tesla FSD that I have experienced in almost every iteration of the system. It can be dangerous on highways, but this time, it only slowed down by about 5-8 km/h and returned to the set speed within a few seconds, without my having to disengage.

However, shortly after, I had a more significant issue that resulted in a critical disengagement.

I had to make a left turn.

A car was coming from the left, but it was pretty far away and not approaching fast. I would have made the turn without hesitation, but I wasn't sure whether FSD, which is often on the cautious side in those turns, would go or wait for the car to pass.

It decided to go, but about a third of the way into the turn, it was almost as if it changed its mind: it stopped, or at least decelerated sharply. It's hard to tell because I had to react fast.

The front of the car was already in the way, so I had to take over and complete the turn faster to make sure the oncoming car didn't crash into me.

Up until that point, I was pretty impressed by FSD v12.5. It's a good reminder that as the tech improves and feels better, and especially as it feels more natural, as it does with this update, it is crucial to remain hyper-vigilant. The smoothness of this update can induce overconfidence, but the system is still prone to errors, as I was quickly reminded.

Electrek’s Take

While I am impressed, and I think this is an important step now that FSD is starting to feel like an actual feature rather than homework for Tesla customers training a system that Tesla sold to us years ago, I still find it hard to see a path from this to unsupervised self-driving, especially on HW3 cars.

Obviously, this was just my first drive, and I need to spend a bit more time with the system for a full review, but I had two disengagements, including a critical one, in about 50 miles. We are still very far from unsupervised.

My main fear is that as the system feels better, like with this update, more people will start getting complacent with it, which could lead to more accidents. I am hoping that Tesla’s new driver monitoring system will counter that potential complacency.

What do you think? Let us know in the comment section below.

FTC: We use income-earning auto affiliate links.