A Tesla driver was arrested for vehicular homicide after he ran over a motorcyclist while using Autopilot and not paying attention to the road.

The accident happened in Snohomish County, Washington, on April 19.

Local news outlets reported on the accident, citing the police report:

The man, 56, had activated Tesla’s Autopilot feature. He was using his phone when he heard a bang as his car lurched forward and crashed into the motorcycle in front of him, troopers wrote.

The motorcyclist, 28-year-old Jeffrey Nissen, was sadly pronounced dead at the scene.

The police conducted a field sobriety test on the Tesla driver, whose name hasn’t been released. The driver admitted to having consumed an alcoholic beverage earlier that day, but he was not found to be impaired at the time.

However, he did admit to being on his phone and not paying attention to the road at the time of the crash.

The state troopers wrote in their report that the driver’s inattention on Autopilot, “putting the trust in the machine to drive for him,” gave them probable cause to arrest him.

He was arrested for vehicular homicide and posted $100,000 bail.

He was driving a 2022 Model S, but it’s unclear which version of Autopilot he was using. Autopilot is now intricately linked to Tesla’s Full Self-Driving since it uses the same software stack.

Electrek’s Take

Please pay attention to the road when using Tesla’s ADAS features. It’s literally a matter of life and death, as this accident unfortunately shows, especially now that cycling and motorcycling season is starting.

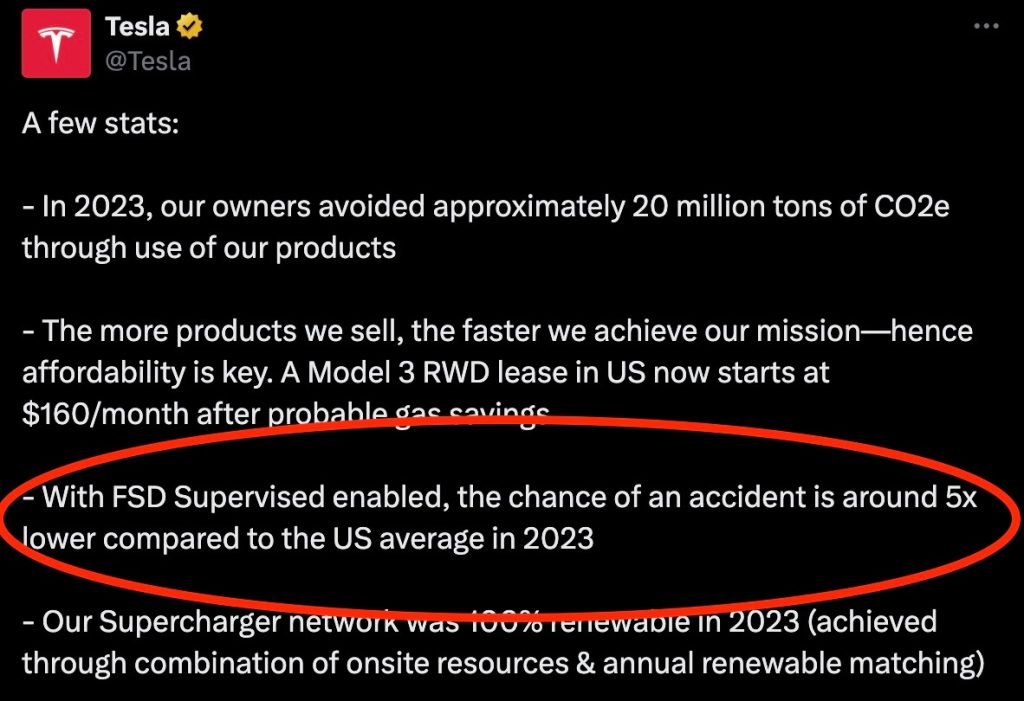

I know some people get confused by Tesla’s propaganda. Even though the automaker clearly asks drivers to keep their hands on the steering wheel and pay attention at all times, Tesla also calls its system Full Self-Driving and says things like this:


However, the only reason FSD is safer than the US average is that it is supervised by drivers who ideally pay extra attention when using it.

Without this attention, I can almost guarantee that it would get into way more accidents. At least for now.

Now, there’s no doubt that the driver is responsible here as he abused the ADAS, but there’s also the question: is Tesla doing enough to prevent abuse of Autopilot and Full Self-Driving?

That was the question at the heart of a recent California lawsuit over another fatal Tesla Autopilot crash, which the automaker ended up settling with the family of the deceased.
