NHTSA's Tesla Autopilot safety probe is nearing its end, and the agency is hinting that it could force Tesla to improve its driver monitoring.

Tesla has been under federal investigation over whether Autopilot has a problem with crashing into emergency vehicles stopped on the side of the road.

The US National Highway Traffic Safety Administration (NHTSA) first opened an investigation into Tesla Autopilot back in 2021 over its possible involvement in 11 crashes with emergency and first responder vehicles. It has since expanded the investigation to cover 16 crashes.

Acting NHTSA Administrator Ann Carlson told Reuters that a resolution to the probe is coming “relatively soon.”

Carlson added:

It’s really important that drivers pay attention. It’s also really important that driver monitoring systems take into account that humans over-trust technology.

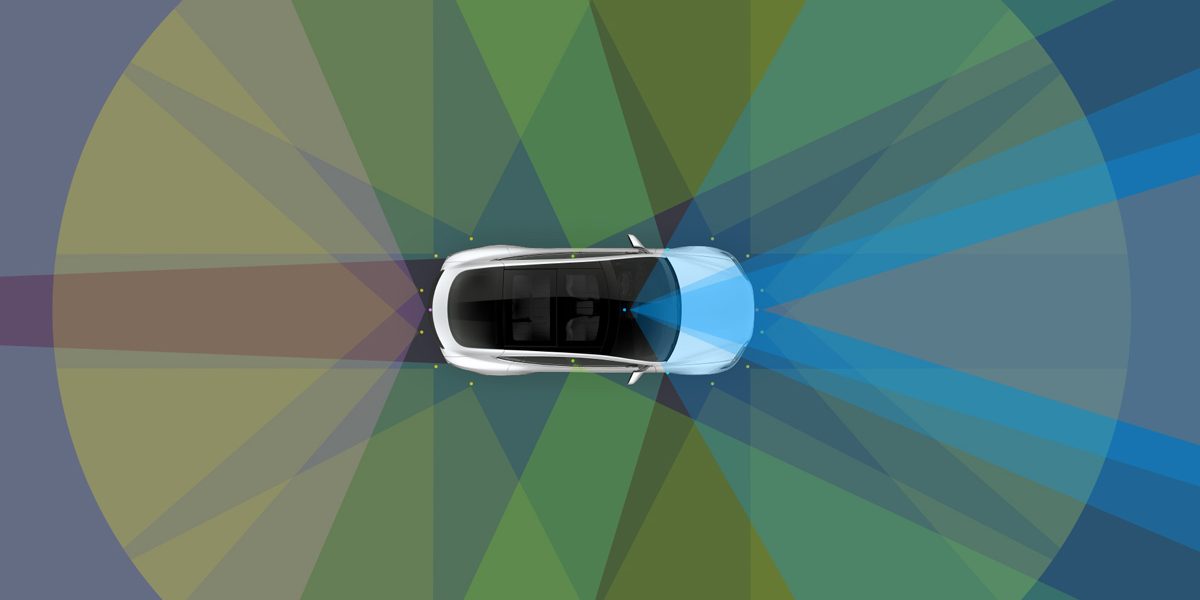

Tesla has often been criticized for its driver monitoring system, which used to be limited to a torque sensor on the steering wheel.

The automaker told drivers to “keep their hands on the steering wheel,” but the system couldn’t actually detect whether the driver’s hands were on the wheel; it could only detect when torque was applied to it.

Tesla changed the warning to “apply torque on the steering wheel” to be more precise.

More recently, Tesla’s driver monitoring system has improved greatly: it now uses the driver-facing camera to detect when drivers are not paying attention to the road.

Electrek’s Take

I think this will end up as another “big Tesla recall,” even though the “fix” will already have been pushed to the entire fleet by the time it’s announced.

It’s true that Tesla used to be lacking in the driver monitoring department, but it introduced those new camera-based monitoring features in FSD Beta, and now it seems to be expanding them to Autopilot.

Speaking from my own experience, they work pretty well: whenever I get distracted, I quickly get an alert.

I don’t see how Tesla can do better than this right now. Ultimately, with the current system, it is still the driver’s responsibility to pay attention at all times. Tesla’s obligation is only to build systems that help ensure drivers are paying attention.
